Implementing AI in GMP: Key Takeaways from the EU’s Annex 22 Guideline

Artificial Intelligence (AI) is no longer science fiction: it is already helping pharmaceutical companies analyze data, optimize manufacturing, and improve quality control [1]. Until recently, however, regulators had said little about how AI fits into the rules that govern Good Manufacturing Practice (GMP) systems. As AI has spread through pharmaceutical manufacturing, regulatory authorities have started to establish guidelines for its compliant use under GMP standards. EMA has now released a draft of the new Annex 22: Artificial Intelligence for consultation, the first official EU GMP guidance on AI use. The annex doesn’t just acknowledge AI; it also sets out how AI can (and cannot) be used in regulated environments [2].

Let’s explore what Annex 22 means, why it matters, and how manufacturers can start preparing.

Why Annex 22?

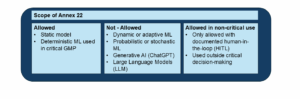

Annex 11 has long been the go-to document for computerized systems under GMP, but it was written before the rise of modern AI and machine learning (ML) [3]. Annex 22 bridges that gap by providing a structured, risk-based framework for AI models used in critical GMP applications, meaning those that could directly affect patient safety, product quality, or data integrity. In short, it ensures innovation doesn’t come at the expense of control. The scope of Annex 22 is summarized in Figure 1.

Figure 1: Overview of Annex 22 scope: classification of what is allowed, not allowed, or restricted to critical use.

Core Principles: People, Documentation, and Risk

Annex 22 stays true to GMP’s familiar pillars: qualified personnel, strong documentation, and risk-based control.

- Collaboration is key: AI projects need input from QA, IT, data scientists, and process SMEs. Everyone involved must understand both the model and its impact on the process.

- Documentation matters: Whether developed in-house or supplied externally, all training, validation, and testing activities must be recorded and reviewable.

- Risk-based approach: The depth of validation and oversight should depend on the potential impact on product quality, data integrity, or patient safety.

These are not new ideas; Annex 22 simply applies existing GMP logic to a new technology. The AI lifecycle is shown in Figure 2.

Figure 2: The six key stages of the AI lifecycle as outlined in EMA Annex 22.

Defining Intended Use

Every AI model must have a clearly documented intended use covering what it does, what data it uses, and under what conditions it operates. Any limitations or sources of bias need to be identified early on, and the intended use should be reviewed and approved before testing begins. Where it makes sense, data should be divided into subgroups (for example, by site, equipment, or defect type) so performance can be evaluated across different conditions. This helps to ensure the model performs consistently and fairly in real-world situations.
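As a rough illustration of subgroup evaluation, the sketch below computes accuracy separately for each subgroup. The function name `accuracy_by_subgroup`, the site names, and the sample results are hypothetical and are not taken from Annex 22; a real evaluation would use the metrics and subgroups defined in the approved intended use.

```python
from collections import defaultdict


def accuracy_by_subgroup(records):
    """Return {subgroup: accuracy} for (subgroup, true_label, predicted_label) tuples."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for subgroup, truth, predicted in records:
        totals[subgroup] += 1
        hits[subgroup] += int(truth == predicted)
    return {group: hits[group] / totals[group] for group in totals}


# Hypothetical test results, grouped by manufacturing site.
results = [
    ("site_A", "reject", "reject"),
    ("site_A", "accept", "accept"),
    ("site_B", "reject", "accept"),  # missed defect at site B
    ("site_B", "accept", "accept"),
]

per_site = accuracy_by_subgroup(results)
for site, accuracy in sorted(per_site.items()):
    print(f"{site}: accuracy = {accuracy:.2f}")
```

A model that looks acceptable on the pooled data can still underperform on one site or defect type, which is why a per-subgroup view of this kind is useful.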

When AI is used to support human decision-making, for instance by proposing product rejections or highlighting deviations, the annex stresses that the operator’s accountability must be defined and maintained as for any other manual task.

Acceptance Criteria: Setting the Bar

Just as in equipment validation, AI models must meet measurable performance criteria that are defined before testing begins. Depending on the model’s role, these metrics may include accuracy, specificity, or F1 score. Setting these expectations early helps to ensure that automation genuinely enhances quality and efficiency, rather than introducing new sources of uncertainty.
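To make the idea concrete, here is a minimal sketch that derives accuracy, specificity, and F1 score from confusion-matrix counts and compares them with predefined minimums. The threshold values in `ACCEPTANCE_CRITERIA` and the counts are invented for illustration; Annex 22 does not prescribe these numbers, and real criteria would come from your approved validation plan.

```python
def classification_metrics(tp, fp, tn, fn):
    """Compute accuracy, specificity, and F1 from confusion-matrix counts."""
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total if total else 0.0
    specificity = tn / (tn + fp) if (tn + fp) else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    return {"accuracy": accuracy, "specificity": specificity, "f1": f1}


# Hypothetical minimums, fixed and approved before any testing takes place.
ACCEPTANCE_CRITERIA = {"accuracy": 0.95, "specificity": 0.95, "f1": 0.90}


def check_acceptance(metrics, criteria):
    """Return {metric_name: True/False} for each predefined criterion."""
    return {name: metrics[name] >= minimum for name, minimum in criteria.items()}


# Hypothetical test outcome: 90 true positives, 2 false positives,
# 98 true negatives, 10 false negatives.
metrics = classification_metrics(tp=90, fp=2, tn=98, fn=10)
verdict = check_acceptance(metrics, ACCEPTANCE_CRITERIA)
model_accepted = all(verdict.values())
```

In this made-up case the model misses the accuracy criterion even though specificity and F1 pass, so it would not be accepted as tested.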

Testing and Data Independence

Annex 22 sets detailed expectations for the test data used to evaluate AI models:

- It must represent the full range of conditions the model will face.

- It should include all relevant subgroups and rare cases.

- Labels must be verified, ideally by multiple experts or validated methods.

- Any cleaning or exclusions must be justified and documented.

A key concept is data independence: the same data must never be used for both training and testing. The annex even recommends procedural and access controls to prevent overlap, so that test results are unbiased and reliable. Finally, staff who work with the test data should not participate in model training. This segregation of duties, borrowed directly from GMP, reinforces the integrity and reliability of AI validation.
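One simple technical check that can complement those procedural controls is to fingerprint every record and confirm that nothing in the test set also appears in the training set. The sketch below assumes records are flat dictionaries; the field names (`batch`, `image_id`) and the helper names are hypothetical, and exact-match hashing will not catch near-duplicates.

```python
import hashlib


def fingerprint(record):
    """Return a stable SHA-256 digest of a flat dict, independent of key order."""
    canonical = "|".join(f"{key}={record[key]}" for key in sorted(record))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def overlapping_records(training_set, test_set):
    """Return the test records whose fingerprint also occurs in the training set."""
    training_prints = {fingerprint(record) for record in training_set}
    return [record for record in test_set if fingerprint(record) in training_prints]


# Hypothetical data sets; the first test record duplicates a training record.
training = [
    {"batch": "B001", "image_id": 17},
    {"batch": "B002", "image_id": 4},
]
test = [
    {"image_id": 17, "batch": "B001"},
    {"batch": "B003", "image_id": 9},
]

leaked = overlapping_records(training, test)
if leaked:
    print(f"Data independence violated: {len(leaked)} shared record(s)")
```

An empty result supports the independence claim for exact duplicates only; it does not replace access controls or segregation of duties.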

Explainability and Confidence

Under GMP, AI can’t be a black box. Annex 22 emphasizes the importance of explainability: the ability to understand why a model made a particular decision. Techniques such as SHapley Additive exPlanations (SHAP) or Local Interpretable Model-Agnostic Explanations (LIME) can highlight which data features influenced an outcome, such as why a product was rejected. Reviewing these features helps confirm that the model is relying on the right factors and not on hidden correlations or irrelevant data. Each model should also log a confidence score for each prediction. If confidence is low, the system should flag the result as “undecided” rather than risk an unreliable call. This prevents blind automation and supports human oversight.
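The “undecided” behavior can be sketched for a binary accept/reject classifier as follows. The function name `decide`, the 0.90 threshold, and the use of the larger class probability as the confidence score are assumptions for illustration; the annex does not specify how confidence is calculated or where the threshold sits.

```python
def decide(probability_reject, threshold=0.90):
    """Map a predicted rejection probability to a label and a confidence score.

    Confidence is taken as the probability of the more likely class (an
    assumption for this sketch). Results below the threshold are returned
    as "undecided" so that a human can review them.
    """
    confidence = max(probability_reject, 1.0 - probability_reject)
    if confidence < threshold:
        return "undecided", confidence
    label = "reject" if probability_reject >= 0.5 else "accept"
    return label, confidence


# Hypothetical model outputs for three units.
for probability in (0.97, 0.60, 0.02):
    label, confidence = decide(probability)
    # In a real system this line would be written to the GMP record.
    print(f"p(reject)={probability:.2f} -> {label} (confidence {confidence:.2f})")
```

Logging the confidence alongside every prediction, including the confident ones, gives reviewers the data they need to judge whether the threshold is still appropriate.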

Operation and Ongoing Control

Once an AI model passes testing, it enters its operational lifecycle, but that doesn’t mean the job is done. Annex 22 requires that models, their systems, and associated processes all fall under change control and configuration management. Any change to the model, software, or input conditions must be evaluated to determine if re-testing is needed.

Performance monitoring should continue throughout use to detect data drift or degraded accuracy. Input data should also be checked regularly to ensure it still falls within the model’s defined “intended use.” Where a human-in-the-loop is part of the process, ongoing review records must be maintained in line with GMP documentation standards.
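A very basic form of input checking is to compare each incoming sample with the ranges covered during testing. In the sketch below, the feature names and limits in `VALIDATED_RANGES` are hypothetical, as are the helper names; real limits would come from the documented intended use, and statistical drift tests would usually be layered on top of a range check like this.

```python
# Hypothetical ranges covered by the test data, per input feature.
VALIDATED_RANGES = {
    "temperature_c": (18.0, 25.0),
    "fill_volume_ml": (9.5, 10.5),
}


def out_of_range_features(sample, ranges):
    """Return the features that are missing or outside their validated range."""
    return [
        name
        for name, (low, high) in ranges.items()
        if name not in sample or not (low <= sample[name] <= high)
    ]


def out_of_range_rate(samples, ranges):
    """Return the fraction of samples with at least one out-of-range feature."""
    if not samples:
        return 0.0
    flagged = sum(1 for sample in samples if out_of_range_features(sample, ranges))
    return flagged / len(samples)


# Hypothetical production inputs: one normal, one too warm, one incomplete.
samples = [
    {"temperature_c": 21.0, "fill_volume_ml": 10.0},
    {"temperature_c": 27.5, "fill_volume_ml": 10.1},
    {"temperature_c": 20.0},
]

rate = out_of_range_rate(samples, VALIDATED_RANGES)
print(f"{rate:.0%} of inputs fall outside the validated ranges")
```

A rising out-of-range rate is a signal to investigate before trusting further predictions, and possibly to trigger change control if the process itself has shifted.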

A Framework for Trustworthy AI

Annex 22 doesn’t aim to slow innovation; it aims to make AI trustworthy, transparent, and traceable. By applying familiar GMP concepts to the AI lifecycle, EMA has given the industry a clear path forward. The annex recognizes that AI can add tremendous value, but only when it’s properly understood, tested, and controlled. For manufacturers, now is the time to get ready:

- Identify where AI or ML is already being used.

- Review your data integrity and model validation processes.

- Train QA and SMEs to engage confidently with AI systems.

At GxP-CC, we are passionate about helping pharmaceutical companies bring AI and ML innovation into a compliant, trusted framework. Here’s how we can support you:

- Lay the Right Foundation: GxP AI Governance

We help you lay the groundwork for compliant AI/ML operations, creating clear governance structures, validation templates, and data integrity controls aligned with FDA, EMA, and ISPE guidance.

- Turn Compliance into an Enabler: GxP AI Validation

Our team turns complex regulatory expectations into practical, risk-based validation strategies so your AI systems stay compliant without slowing down innovation.

- Keep Your AI Audit-Ready: GxP AI Health Check

Through independent assessments and gap analyses, we help you identify risks early, strengthen governance, and stay ready for any inspection or audit.

As the life sciences industry becomes increasingly digital, Annex 22 offers exactly what GMP has always promised: a framework for quality through understanding and control. The future of GMP won’t be human versus AI; it will be humans and AI working together to make medicines safer, smarter, and more reliable.

References

[1] Huanbutta, K., Boonme, P., & Khuntontong, P. (2024). Artificial intelligence-driven pharmaceutical industry: A paradigm shift in drug discovery, formulation development, manufacturing, quality control, and post-market surveillance. European Journal of Pharmaceutical Sciences, 195, 106723. https://doi.org/10.1016/j.ejps.2024.106723

[2] European Commission. (2025). Draft – EU GMP Annex 22: Artificial Intelligence [Consultation guideline]. Retrieved from https://www.gmp-compliance.org/files/guidemgr/mp_vol4_chap4_annex22_consultation_guideline_en.pdf

[3] European Commission. (2011). EudraLex – Volume 4, Annex 11: Computerised Systems. Brussels, Belgium: European Commission. Retrieved from https://health.ec.europa.eu/system/files/2016-11/annex11_01-2011_en_0.pdf